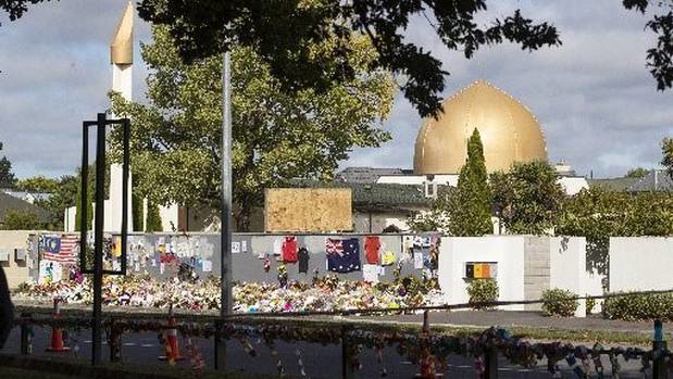

A year on from the Christchurch mosque shootings, raw footage from the alleged gunman's video is still appearing on Facebook and Facebook-owned Instagram.

Eric Feinberg, vice president of the Content Moderation Coalition, has been consistently finding copies of the gunman's clip since the March 15 tragedy last year.

Before he talked to the Herald earlier this week (see video above), he was able to locate 14 copies of the clip across Facebook and Instagram.

The New York-based hate content researcher says he has been able to find copies of the clip that have escaped Facebook's filters, in part, because he has run searches for related terms in multiple languages, and used machine-learning tools that have allowed him to locate edits, including those that are cleverly disguised. In one instance, he found four sections of the gunman's video woven into a mock video game.

He has also found partial copies of the clip embedded within news reports, which he concedes is a "grey area" in the US - although it's not in New Zealand, where the Chief Censor rated the footage objectionable, meaning it is illegal to view or share (and not just in the abstract.

As the first anniversary of the Christchurch mosque shootings approached, Chris Keall talked to New York-based hate-content researcher and Vice President of the Content Moderation Coalition Eric Feinberg.

In June last year, Christchurch man Philip Neville Arps was sentenced to 21 months for sharing the clip. He was released on January 29, with a GPS tracker and a condition that he not go near the two mosques.)

Facebook says it has beefed up its human and artificial intelligence filters since the Christchurch shootings, and taken steps such as multi-language searches and "listening" for gunshot-like audio (Facebook's policy director for counter-terrorism told US Congress members that its algorithm did not detect the massacre livestream because there was "not enough gore".)

But Feinberg is unimpressed.

"The argument, [I] always hear this, that 'because of the systems, we're able to catch perpetrators.' No, you're creating the perpetrators," the researcher says.

"Look what happened in Christchurch. [The gunman] had an agenda. It was premeditated that he was going to go in and do what he did. And because the system existed, he was allowed to broadcast this to the world."

In Feinberg's opinion, there is still too much hate content on Facebook. He considers that the social network leans too heavily on free-speech arguments.

Last week, Martin Cocker, head of NetSafe, the approved government agency under the Harmful Digital Communications Act, struck a similar note, saying: "There has been good progress against violent content on Facebook and other platforms, but there hasn't been a heck of a lot of progress against hate pages that can ignite it."

And Islamic community leader Aliya Danzeisen said although Facebook had made some progress, she felt it still fell on Muslim members of the social network to report hate content.

"I'd like to sit down with Mark Zuckerberg," she said. "I just don't think he realises what the impact is on the average Muslim kid - or adult - of the sustained abuse."

Facebook has declined to follow the lead of Google-owned YouTube in switching off livestreaming for most users post-Christchurch.

However, it will now suspend or permanently block individual users who violate its policies.

Facebook ANZ policy director Mia Garlick said: "Since March 15, we've made significant changes and investments to advance our proactive detection technology, grow our teams working on safety, and respond even quicker to acts of violence.

"No single solution can prevent hate and violent extremism, but the meaningful progress on the commitments made to the Christchurch Call are delivering real action in New Zealand and internationally."

Facebook had now banned more than 200 white supremacist organisations from its platform, Garlick said.

"When someone searches for something related to hate and extremism in the US – we point them to resources that help people leave behind hate groups.

"Last year, we expanded this to Australia in partnership with Exit Australia, an organisation focused on helping to redirect people away from hate and radicalisation."

Between July and September last year, Facebook says it took down:

- 7 million pieces of hate speech, 80.2 per cent proactively before it was reported (up from 53 per cent this time the year prior).

- 29.3 million pieces of graphic violent content, 98.6 per cent proactively before it was reported.

- 5.2 million pieces of terrorist propaganda, 98.5 per cent proactively before it was reported.

Where to get help:

• Lifeline: 0800 543 354 (available 24/7)

• Whats Up?: 0800 942 8787 (1pm to 11pm)

• Depression helpline: 0800 111 757 (available 24/7)

• Youthline: 0800 376 633

• Kidsline: 0800 543 754 (available 24/7)

If it is an emergency and you feel like you or someone else is at risk, call 111.
